Welcome to part four of this miniseries on the concept of Environment as a Service. As discussed in part one, an environment comprises everything needed to run an application and, in a Kubernetes-centric platform, it starts with the provisioning of a namespace.

Sometimes, though, we need components and configurations to exist outside of our namespace for our applications to run properly.

These external configurations may involve global load balancers, firewalls, certificates provisioned from external PKIs, and more. Sometimes we also need entire assets from these external dependencies, such as databases or messaging systems.

In this article, we will explore how tenants of our platform can self-service these external dependencies using the same GitOps-based approaches discussed in part three of this series.

When the external dependencies are secured resources, we also need a way to bring the associated credentials to our applications. In part two of this series, we discussed how this process can be facilitated with static credentials. In this article, we will improve upon that initial setup and see how to provision narrowly-scoped (least privilege) and short-lived credentials (a.k.a. dynamic credentials). This approach greatly increases the security posture of our platform.

External resources

We are used to having operators configure different aspects of a Kubernetes cluster, but the same declarative approach can be used to configure infrastructure that resides outside of the cluster.

Popular Kubernetes operators such as cert-manager and external-dns, which respectively provision certificates from an external PKI and configure external DNS providers, established this pattern years ago.

More operators of the same type have emerged over time, but Crossplane captures this approach quite well. Crossplane aims to provide full coverage of the major cloud provider APIs. In addition, its robust plugin system makes it easy to add coverage for any resource that can be accessed via an API (see the Upbound marketplace for the full list of plugins).

Crossplane is also a framework that provides the ability to bundle resources together into a Composite (comparable to a Helm chart), creating a new concept that becomes a first-class citizen in Kubernetes. The result is a new Custom Resource Definition (CRD). For example, a Composite could represent an RDS PostgreSQL database, bundling all the AWS resources needed to establish and configure an instance.

Once a Composite is defined, Crossplane allows you to define a Claim for it. This represents the resource that tenants can use to request the provisioning of the Composite (the RDS database in our example). Claims hide the complexity of the Composite and spare tenants from needing access to the highly privileged credentials required to provision it.

Finally, when provisioned resources return credentials (in our example, the database credentials), Crossplane gives you the ability to deposit such credentials in a variety of credential stores, including Kubernetes Secrets and HashiCorp Vault.
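To make the Composite and Claim relationship more concrete, here is a minimal sketch of how a claimable type could be declared with a Crossplane CompositeResourceDefinition. It only writes a manifest to a local file. The Composite kind `XPostgreSQLInstance`, the file name, and the schema fields are assumptions chosen to line up with the Claim used later in this article; the actual definition in the repository may differ.

```shell
set -eu

# Hypothetical file name; the definition below is an illustrative sketch only.
xrd_file="xpostgresqlinstance-xrd.yaml"

cat > "${xrd_file}" <<'EOF'
apiVersion: apiextensions.crossplane.io/v1
kind: CompositeResourceDefinition
metadata:
  # The name must be <plural>.<group>
  name: xpostgresqlinstances.redhat-cop.redhat.com
spec:
  group: redhat-cop.redhat.com
  names:
    # Cluster-scoped Composite (assumed name)
    kind: XPostgreSQLInstance
    plural: xpostgresqlinstances
  claimNames:
    # Namespaced Claim that tenants create
    kind: PostgreSQLInstance
    plural: postgresqlinstances
  versions:
    - name: v1alpha1
      served: true
      referenceable: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                parameters:
                  type: object
                  properties:
                    region: {type: string}
                    availabilityZone: {type: string}
                    size: {type: string}
                    storage: {type: integer}
EOF

echo "wrote ${xrd_file}"
# On a cluster with Crossplane installed, this could be applied with:
#   oc apply -f xpostgresqlinstance-xrd.yaml
```

The `claimNames` section is what exposes the namespaced kind to tenants, while the cluster-scoped Composite and the resources behind it stay under the platform team's control.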

While root credentials have been provisioned, it is recommended that tenants not use these elevated credentials and instead use short-lived and narrowly-scoped credentials to access these external resources. Refer to the article that describes approaches for provisioning short-lived and narrowly-scoped credentials. This article will implement several of these concepts.

High-level architecture

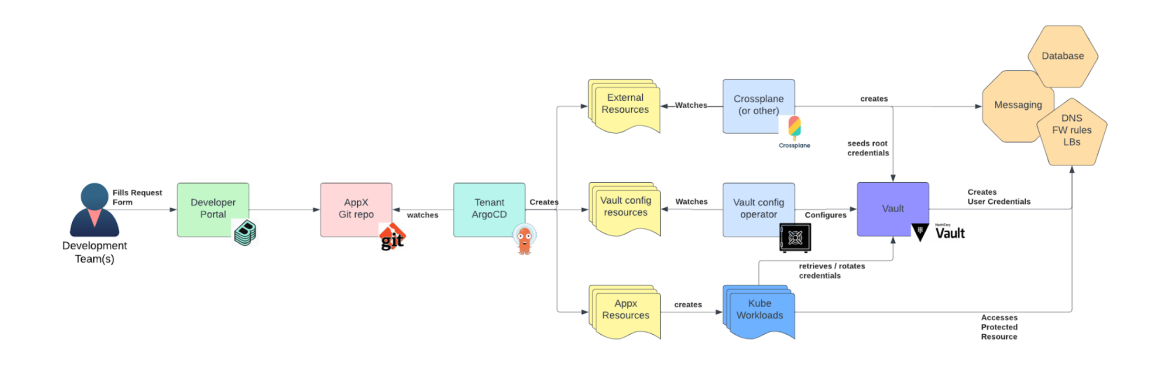

The diagram below depicts a high-level architecture of the pattern that will be implemented for our external resources use case:

Moving from left to right, we can see that members of a developer team can interact with a developer portal and fill out a form to request a new external resource. The developer portal can be implemented using a variety of tools; in our case, we will use Red Hat Developer Hub (RHDH). Alternatively, developers could make a pull request to their GitOps repository to achieve the same result (part three of this series covers this use case).

The following describes the actions that occur as part of the pull request process:

- The pull request contains a Crossplane Claim to create the external resource.

- Once ArgoCD deploys the Claim, the Crossplane operator will instantiate its corresponding Composite and start deploying the set of external configurations needed to provision the external resource.

- As part of the deployment process, if the resource requires authentication for access, root credentials will be returned. Crossplane stores these root credentials within Vault.

- The Composite contains manifests that set up additional configuration in Vault, in particular a database connection and its related role(s). These manifests make use of the previously returned root credentials. The database connection is configured at the previously created database secret engine mount path; one such mount exists per tenant namespace.

- Tenant-deployed applications can now obtain dynamic credentials by requesting them from the desired database secret engine role (we have one role in our example).

Implementation walkthrough

The following implementation describes an example pattern that is built from the concepts described previously. It will make use of an AWS environment, and, in particular, provision a PostgreSQL RDS Database. A repository containing these assets can be found here.

If you recall from part two of this series, every tenant gets a path in Vault in which they can store static secrets. Following the same principle, every tenant will be given a path in Vault in which database secrets can be retrieved using the path pattern: applications/<team>/<namespace>/databases.

We are giving tenants this path (in Vault terminology, it is a database secret engine mount) regardless of whether they requested it or not. This simplifies the process and is cheap in Vault. The definition for this configuration can be found here. In addition, since database secret engines support multiple connections, this setup also works if the application requires access to more than one database.

With that process complete, the next step is to deploy Crossplane to every cluster, starting with the Crossplane operator and the secret store extension that can store secrets in Vault. The definitions are located here.

Next, we can deploy all of the Crossplane providers that we need for our setup. A provider is a small operator that covers specific APIs. Because cloud providers have hundreds of APIs, Crossplane splits each cloud provider's APIs across several providers.

In our case, we need the AWS RDS provider and the Kubernetes provider (we need some plain Kubernetes objects in the Composite). The configurations for these providers are located here.
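As a small illustration of the path convention, the sketch below builds the per-tenant mount path and prints the imperative Vault command that would create such a mount. The article configures the mount declaratively, so the command is shown only for orientation, and it is printed rather than executed so the snippet runs without a Vault server. The helper name `db_mount_path` is hypothetical; `team-a` and `myapp-prod` are the tenant and namespace used later in this article.

```shell
set -eu

# Build the per-tenant database secret engine mount path, following
# the pattern applications/<team>/<namespace>/databases.
db_mount_path() {
  local team="$1"
  local namespace="$2"
  printf 'applications/%s/%s/databases\n' "${team}" "${namespace}"
}

mount="$(db_mount_path team-a myapp-prod)"
echo "${mount}"

# Imperative equivalent of the declarative mount definition
# (printed only, not executed).
echo "vault secrets enable -path=${mount} database"
```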
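For orientation, a provider is installed by creating a `Provider` object that points at a package image. The sketch below writes two such manifests to a local file. The package locations are assumptions about where these providers are published, and `<version>` is a deliberate placeholder; check the marketplace listing and the linked configurations for the values actually used.

```shell
set -eu

# Hypothetical file name; package references are assumptions to verify.
providers_file="crossplane-providers.yaml"

cat > "${providers_file}" <<'EOF'
apiVersion: pkg.crossplane.io/v1
kind: Provider
metadata:
  name: provider-aws-rds
spec:
  # <version> is a placeholder; pin a released tag in practice
  package: xpkg.upbound.io/upbound/provider-aws-rds:<version>
---
apiVersion: pkg.crossplane.io/v1
kind: Provider
metadata:
  name: provider-kubernetes
spec:
  package: xpkg.upbound.io/crossplane-contrib/provider-kubernetes:<version>
EOF

echo "wrote ${providers_file}"
```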

The next step is to configure the providers. This is where we pass the necessary credentials to the providers along with configuring them to use Vault as their secret store. The definitions can be found here.

We also need to create a place in Vault for Crossplane to deposit the root credentials associated with the external resource. The path pattern that was chosen follows the pattern infra/clusters/<cluster-name>/crossplane-secrets.

The configuration with the secret engine mount and the related permissions necessary for Crossplane is defined here.

With Crossplane configured, the next step involves defining the Composite representing the external PostgreSQL database. The definition can be found here. Note that this definition is aimed at supporting this specific use case and is not a sophisticated or production-ready Composite definition.

This Composition comprises the RDS instance itself, a subnet group (with hardcoded subnets to simplify this walkthrough; avoid this in your own implementation), and the Vault configuration manifests necessary for creating a database secret engine configuration (a.k.a. connection) and role. The database connection and role are configured at the per-namespace database secret engine mount, which is defined here. Ensure that your Vault instance can reach the RDS instance so that it can generate the associated credentials.
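The following sketch shows roughly what this mount and its permissions could look like if expressed imperatively. It assumes a KV version 2 secret engine, which the article does not state, and uses a hypothetical cluster name (`my-cluster`), helper name, and policy file name. The Vault command is printed rather than executed so the snippet runs without a Vault server.

```shell
set -eu

# Build the Crossplane secrets path, following the pattern
# infra/clusters/<cluster-name>/crossplane-secrets.
crossplane_secrets_path() {
  local cluster="$1"
  printf 'infra/clusters/%s/crossplane-secrets\n' "${cluster}"
}

path="$(crossplane_secrets_path my-cluster)"   # my-cluster is hypothetical

# Assumption: a KV v2 mount (printed only, not executed).
echo "vault secrets enable -path=${path} -version=2 kv"

# Sketch of a policy letting Crossplane manage secrets under that mount.
# KV v2 exposes secret values under data/ and their metadata under metadata/.
cat > crossplane-policy.hcl <<EOF
path "${path}/data/*" {
  capabilities = ["create", "read", "update", "delete"]
}
path "${path}/metadata/*" {
  capabilities = ["read", "list", "delete"]
}
EOF

echo "wrote crossplane-policy.hcl"
```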
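To give a feel for what the Vault configuration manifests achieve, here is a rough imperative equivalent using the Vault database secret engine's `config` and `roles` endpoints. This is a sketch under assumptions, not the repository's actual configuration: the connection name, role name, RDS endpoint, `max_ttl`, and SQL statements are all hypothetical, and the root credentials are left as placeholders. The commands are printed rather than executed so the snippet runs without a Vault server.

```shell
set -eu

print_db_setup() {
  local mount="applications/team-a/myapp-prod/databases"
  local conn="my-db"                       # hypothetical connection name
  local role="my-db-read-only"             # hypothetical role name
  local db_host="my-db.example.internal"   # hypothetical RDS endpoint

  # Vault fills in {{username}}, {{password}}, {{name}} and {{expiration}}
  # itself; they are template fields, not shell variables.
  cat <<EOF
vault write ${mount}/config/${conn} \\
  plugin_name=postgresql-database-plugin \\
  allowed_roles=${role} \\
  connection_url='postgresql://{{username}}:{{password}}@${db_host}:5432/postgres' \\
  username=<root-user> password=<root-password>

vault write ${mount}/roles/${role} \\
  db_name=${conn} \\
  default_ttl=1h max_ttl=24h \\
  creation_statements="CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}'; GRANT SELECT ON ALL TABLES IN SCHEMA public TO \"{{name}}\";"
EOF
}

print_db_setup
```

The first command registers the connection using the root credentials; the second defines a read-only role whose `creation_statements` Vault runs each time a tenant requests credentials, which is what makes them short-lived and narrowly scoped.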

With all of the configurations in place, let’s provision a database.

In the following example, you can see that team-a has added an ArgoCD Application defining a Claim for a PostgreSQL database. The manifest of greatest importance is the following:

```yaml
apiVersion: redhat-cop.redhat.com/v1alpha1
kind: PostgreSQLInstance
metadata:
  name: my-db
  labels:
    team: team-a
spec:
  parameters:
    region: us-east-2
    availabilityZone: us-east-2a
    size: small
    storage: 20
```

Once this Claim is deployed, Crossplane will start creating the RDS database.

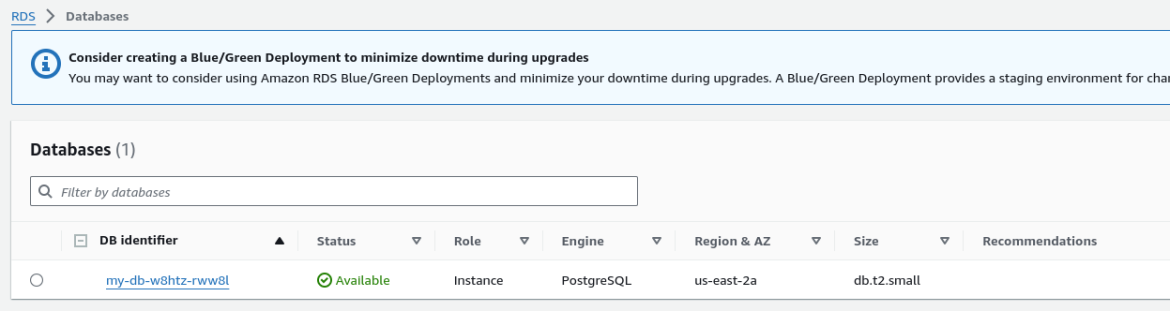

If everything works as expected, we will see an RDS instance in the AWS console, as shown here:

In Vault we should also be able to see the instance root secret similar to the following:

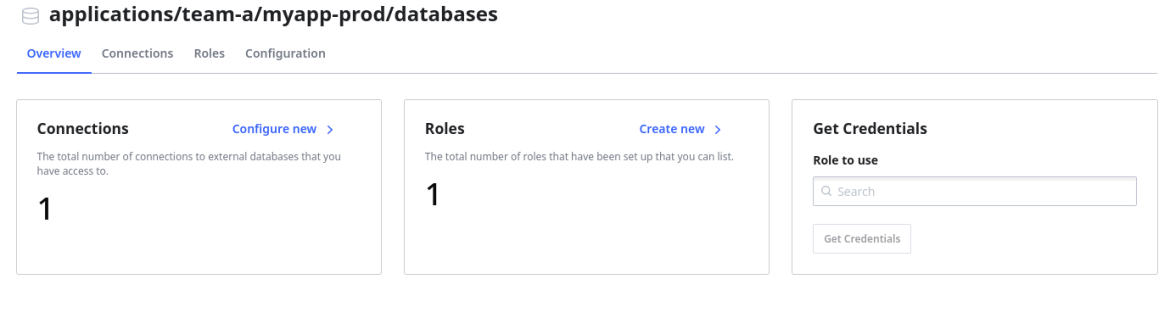

Also, under the tenant database secret path, a Connection and a Role (for this example a read-only Role has been configured):

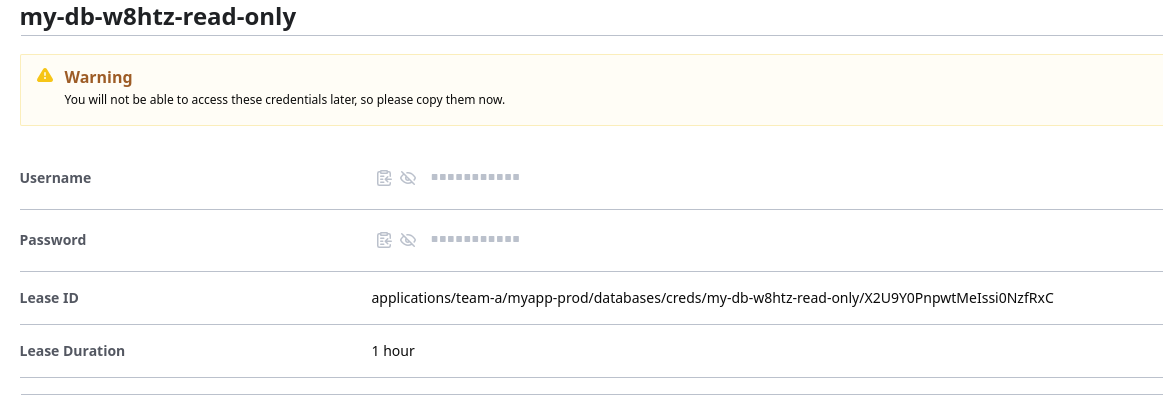

If we generate a secret for the read-only role using the Vault UI, we should see new credentials:

Now let’s do the same from the CLI, pretending to be a workload deployed by the team:

```shell
rspazzol@fedora:~$ export jwt=$(oc create token default -n myapp-prod)
rspazzol@fedora:~$ export VAULT_TOKEN=$(vault write -format=json auth/prod/login role=secret-reader-team-a-prod jwt=${jwt} | jq -r .auth.client_token)
rspazzol@fedora:~$ vault read applications/team-a/myapp-prod/databases/creds/my-db-w8htz-read-only
Key                Value
---                -----
lease_id           applications/team-a/myapp-prod/databases/creds/my-db-w8htz-read-only/p5BFCOlSJMDJ3qqAYkAY2aT0
lease_duration     1h
lease_renewable    true
password           apOK-tknZ65t3FEM2OW7
username           v-prod-mya-my-db-w8-50ZkFabwawS8isPB0PSl-1707238505
```

Conclusions

This article concludes our series on the Environment as a Service (EaaS) use case, at least for now.

Along the way, we discussed how to manage the lifecycle of resources that are external to a Kubernetes cluster but are still associated with the environment. In addition, we highlighted how to set up access to dynamic credentials for our tenants so that they can connect to these resources.

We think that EaaS is one of the foundational use cases for building a Developer Platform and hopefully this series provided you a better understanding of options and approaches for this use case when building a Kubernetes-centric developer platform.

Naturally, there are additional considerations involved when building a developer platform beyond EaaS. For example, topics such as inner-loop, outer-loop and monitoring were not addressed. These areas are ideal topics for future discussions.

About the author

Raffaele is a full-stack enterprise architect with 20+ years of experience. Raffaele started his career in Italy as a Java Architect then gradually moved to Integration Architect and then Enterprise Architect. Later he moved to the United States to eventually become an OpenShift Architect for Red Hat consulting services, acquiring, in the process, knowledge of the infrastructure side of IT.

Currently Raffaele covers a consulting position of cross-portfolio application architect with a focus on OpenShift. Most of his career Raffaele worked with large financial institutions allowing him to acquire an understanding of enterprise processes and security and compliance requirements of large enterprise customers.

Raffaele has become part of the CNCF TAG Storage and contributed to the Cloud Native Disaster Recovery whitepaper.

Recently Raffaele has been focusing on how to improve the developer experience by implementing internal development platforms (IDP).
